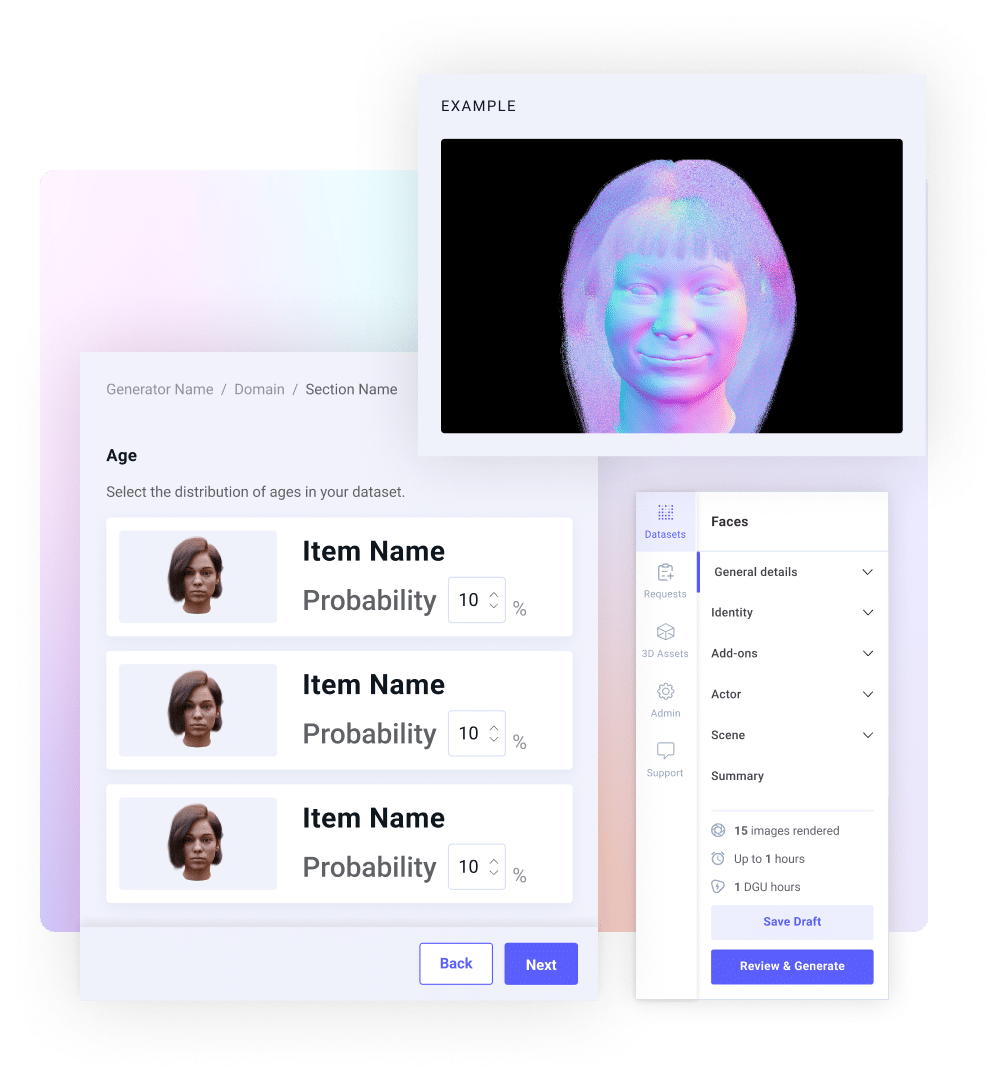

Platform

Easy data management at your fingertips

Your visual assistant for data generation at scale

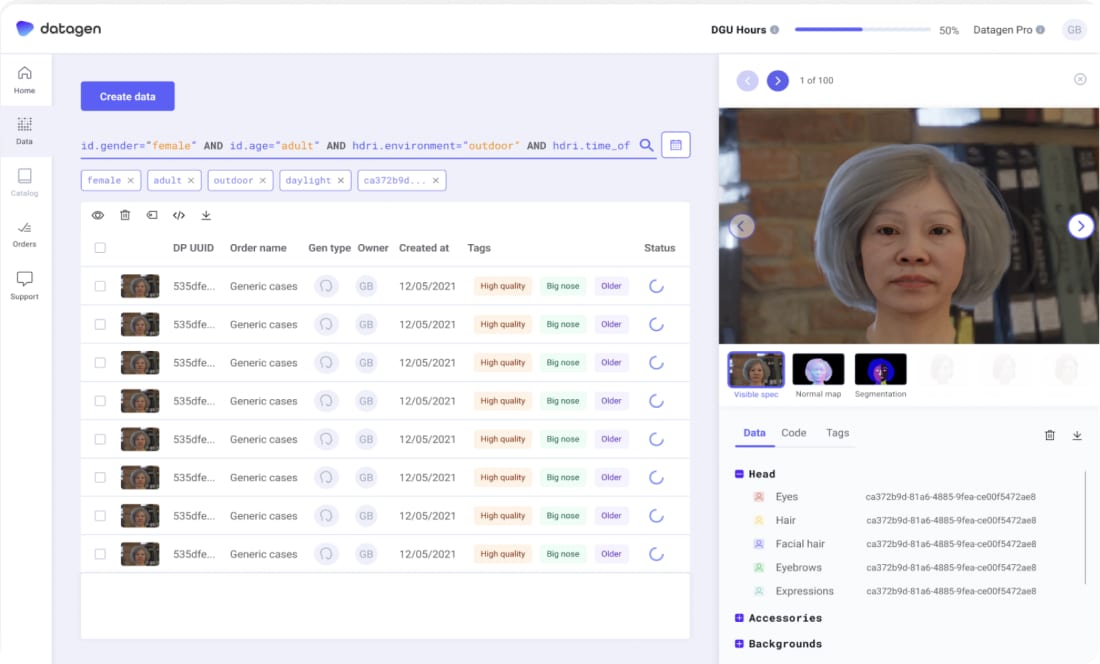

Scaling up data generation can present challenges, particularly when it comes to managing, tracking, and visualizing the full range of options available to you through an API. The Datagen Platform complements our API by letting you preview the various assets, generate data in a lightweight, iterative way, and retrieve previously generated data.

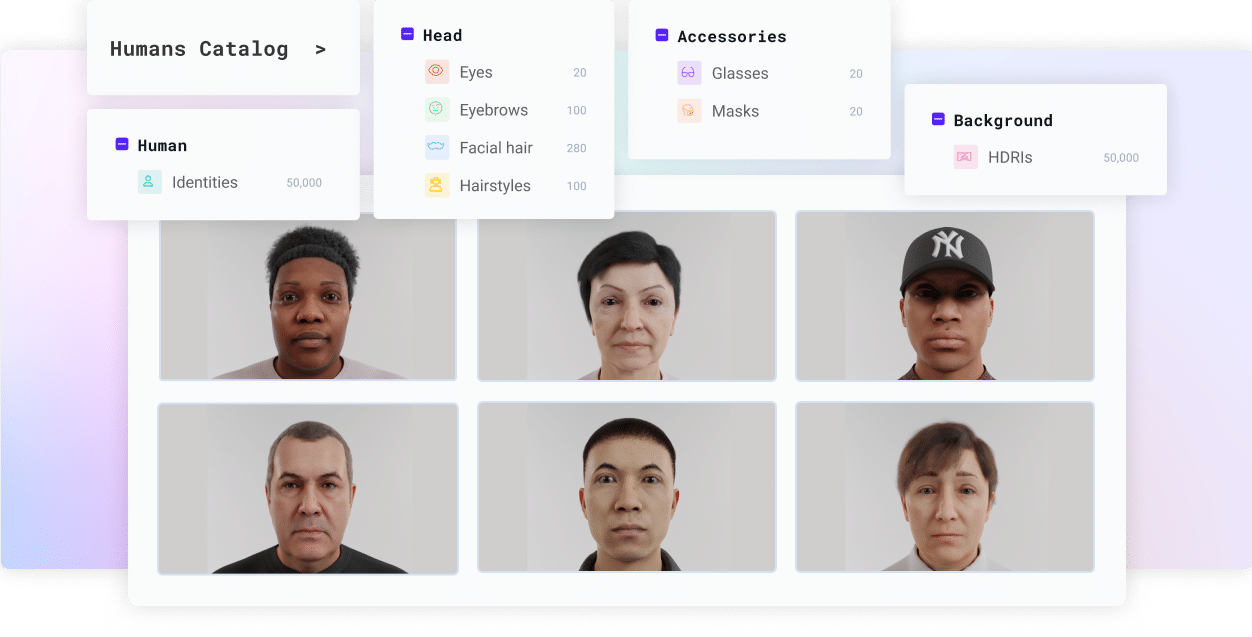

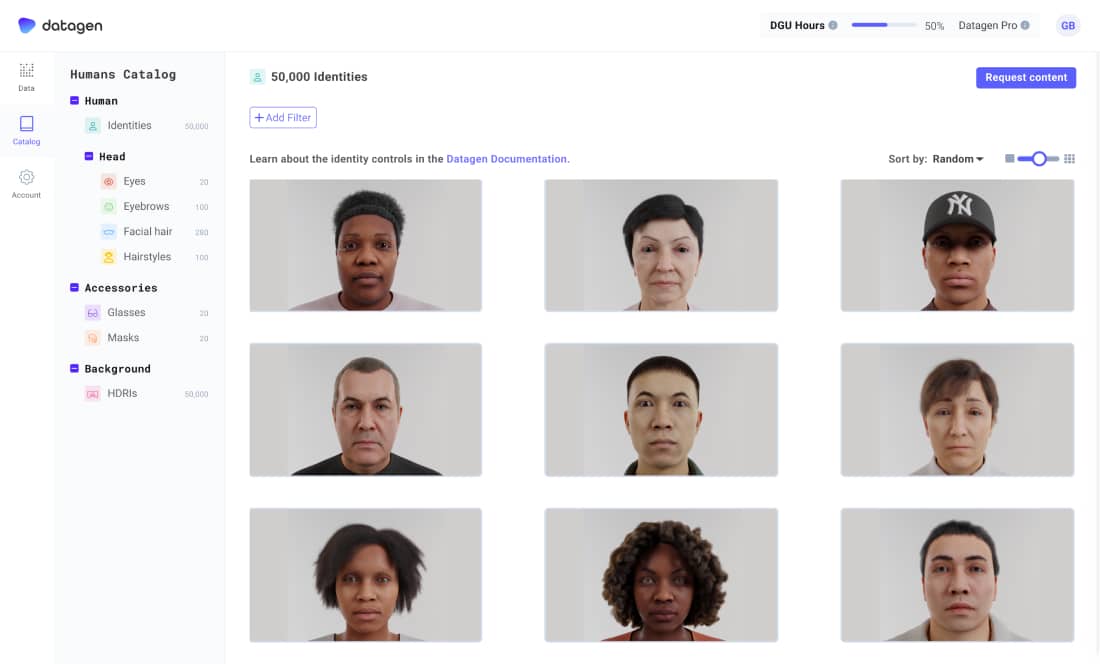

Browse and select

Our platform includes a catalog of all identities and assets. Browse through the options to select the exact data you need and import it into our API.

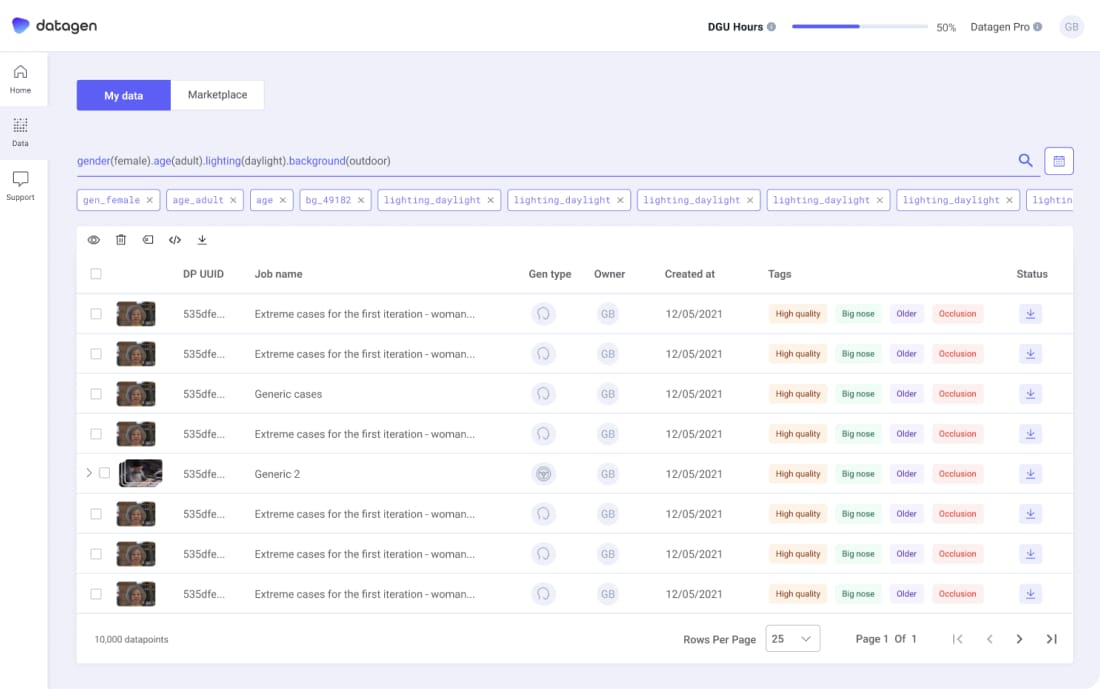

Manage and share

Manage and share synthetic data within your team or company with ease. With the platform’s intuitive interface, you can quickly query for data points with specific characteristics, whether they were generated by you or by others on your team.

Generate quickly and easily

The platform allows you to quickly generate data before diving into our API and SDK. Choose the humans and/or behaviors that you want to generate and get your data with the click of a button.

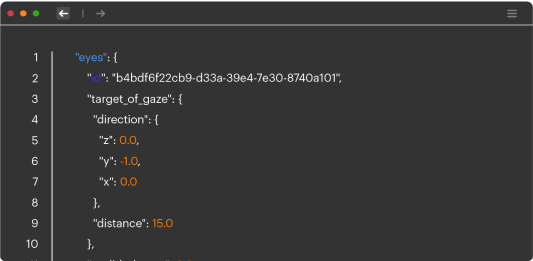

To generate data on a large scale with granular control over every parameter, the API is the solution for you.

"I think the quality of the data, sophistication, and the platform looks really good. So kudos on that. That’s amazing."

Viral Carpenter, Product Manager at Google

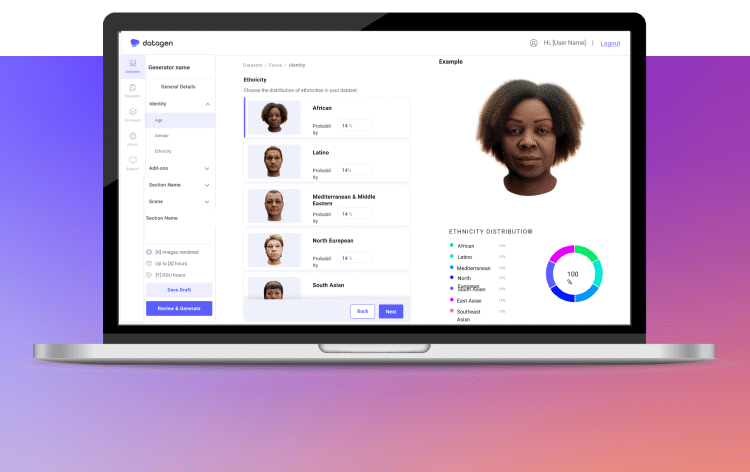

Our data

100k+ unique identities, perfect ground truth, and high domain variance: everything you need to train, test, and cover data gaps in your computer vision model.

Our data tailored for your industry

Choose your computer vision task

Face and hair segmentation

Design the exact dataset you need for your computer vision task.

Our data

Full body humans in context

Generate fully annotated, pixel-perfect full-body humans and realistic scenes of humans interacting with objects in various environments.

Data generation and management

API

Our API enables you to request the exact dataset you need for your use case by giving you full control over individual parameters through code.

Platform

Our platform enables you to manage, preview, and share your data within your team.